AI Valuation Multiples in Q1 2026: Dispersion Widens as Investors Reprice Quality

AI valuation multiples in Q1 2026 did not move in one clean direction.

They separated.

Across public comps, private rounds, and recent M&A, the market continued to pay up for a narrower profile of AI companies: those with clear monetization, repeatable demand, and unit economics that look better each quarter.

Everything else, especially teams still selling “growth now, business model later,” faced sharper discounts. That is why two AI companies can post similar headline growth and still land at very different valuation outcomes. Investors are pricing the quality of the revenue, not the excitement of the category.

Three factors show up again and again behind the dispersion:

Monetization clarity: revenue that is contracted, repeatable, and tied to a specific workflow tends to price materially better than usage that is hard to forecast or easy to churn.

Economics that improve with scale: gross margin profile, contribution margin, and the path to sustaining margins once compute is fully loaded have become central to underwriting.

Efficiency and durability: retention, expansion, and sales efficiency increasingly explain who gets premium multiples and who gets repriced.

This update unpacks what those signals look like across the AI stack, and where the repricing is most visible:

Core AI vs. Applied AI: capital and attention shifting toward distribution, vertical depth, and defensible routes to market

Agentic AI: momentum moving from narrative to early commercialization, with uneven proof across companies

Valuation and fundamentals: a tighter link between multiples and margin profile, retention, and efficient scaling

Cross-market triangulation: how public comps, private rounds, and M&A together shape what investors treat as “bankable” in AI

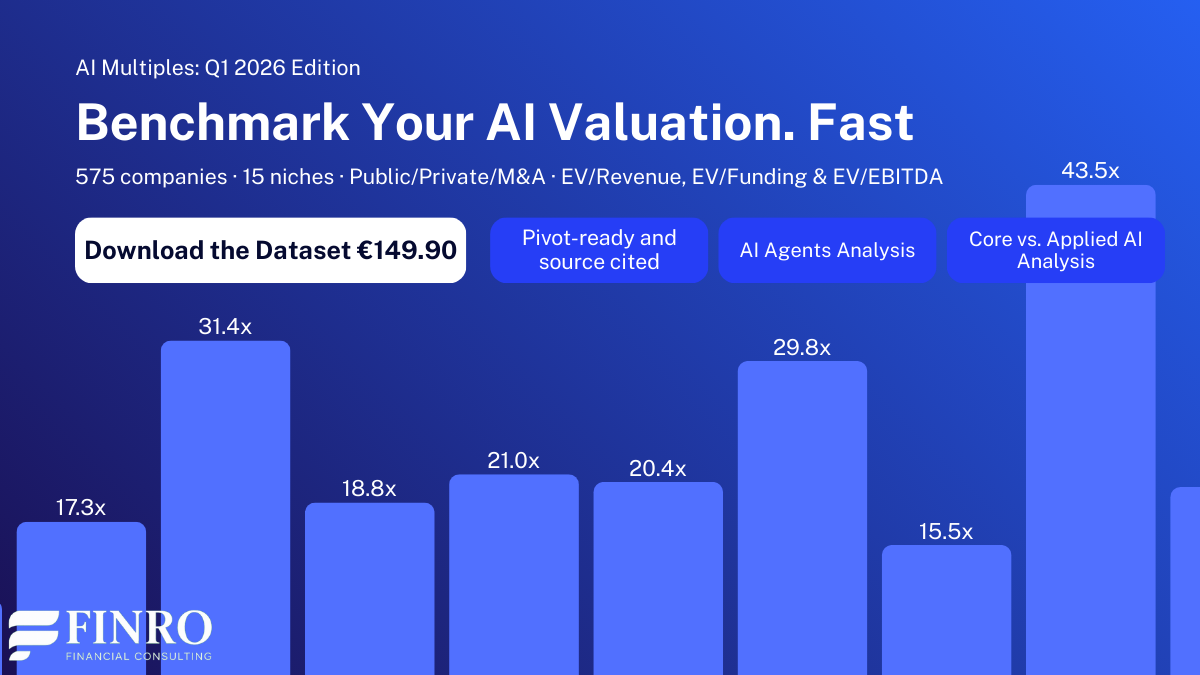

If you want the underlying comps and benchmarks behind the themes discussed here, Finro maintains a Q1 2026 AI Valuation Multiples dataset covering public companies, private rounds, and M&A across AI niches. I will include a link toward the end for anyone who wants the full reference set.

From here, we focus on the practical implication of the dispersion: what is being rewarded, what is being repriced, and what founders and investors can take away when they look past headline growth and into the mechanics that actually drive AI valuations.

AI multiples in Q1 2026 did not move together; they split. Premiums went to companies with repeatable monetization, durable demand, and improving unit economics, while “growth now, business model later” got repriced. Public comps anchor what is defensible, private rounds price scarcity-driven upside, and M&A enforces what is actually monetizable. Across the stack, core AI earns premiums when it becomes picks-and-shovels infrastructure, and applied AI earns premiums when it is embedded in budgeted workflows with measurable ROI and repeatable deployment.

- What actually widened dispersion in Q1 2026?

- Agentic AI moved from narrative to early monetization, with uneven proof

- Core AI vs. Applied AI: valuation follows distribution and monetization, not “model sophistication”

- What the market rewarded inside Core AI

- What the market rewarded inside Applied AI

- The practical implication: “core vs. applied” is becoming a shorthand for underwriting certainty

- Where multiples diverge across AI segments

- The Q1 shift: public repricing, M&A anchoring, private upside

- Download the Q1 2026 AI multiples dataset

- Summary: Dispersion is the signal

- Key Takeaways

- Answers to the Most Asked Questions

What actually widened dispersion in Q1 2026?

Dispersion did not widen because investors suddenly changed their view on “AI.” It widened because the market got stricter about what qualifies as underwritable performance.

In practice, Q1 2026 pricing looked less like a single AI multiple range and more like a set of separate lanes. Companies in the top lane were not just growing. They were showing that growth can persist without runaway burn, margin erosion, or fragile demand. Companies outside that lane could still point to momentum, but the market treated it as provisional.

Three mechanisms drove most of the spread.

Proof replaced promise as the primary underwriting input

Many AI companies can show impressive product velocity and headline growth, especially early. The repricing happened when investors started asking whether that growth is repeatable under real constraints.

The highest outcomes clustered around evidence that demand is durable and monetization is real, such as:

Clear willingness to pay, not just usage

Retention that holds once novelty fades

Expansion that comes from workflow value, not discounts or heavy services

Predictable sales motion, even if it is still maturing

The discounts clustered around patterns that still read like “learning mode,” such as:

Revenue that is tightly coupled to ongoing incentives or heavy customization

Demand that depends on a narrow set of pilots or one buyer archetype

Weak renewals masked by new logo growth

This is where the gap forms. Two companies can grow at similar rates, but the one that demonstrates repeatability earns a fundamentally different multiple.

This update synthesizes signals from Q1 2026 public-market comps, disclosed private rounds, and recent M&A benchmarks across the AI stack. The focus is on valuation dispersion and the operating attributes investors appear to reward (monetization clarity, durability of demand, and improving unit economics). Coverage is directional rather than exhaustive. Multiples can move quickly with sentiment, liquidity, and compute costs, so treat point-in-time benchmarks as context, not a pricing guarantee.

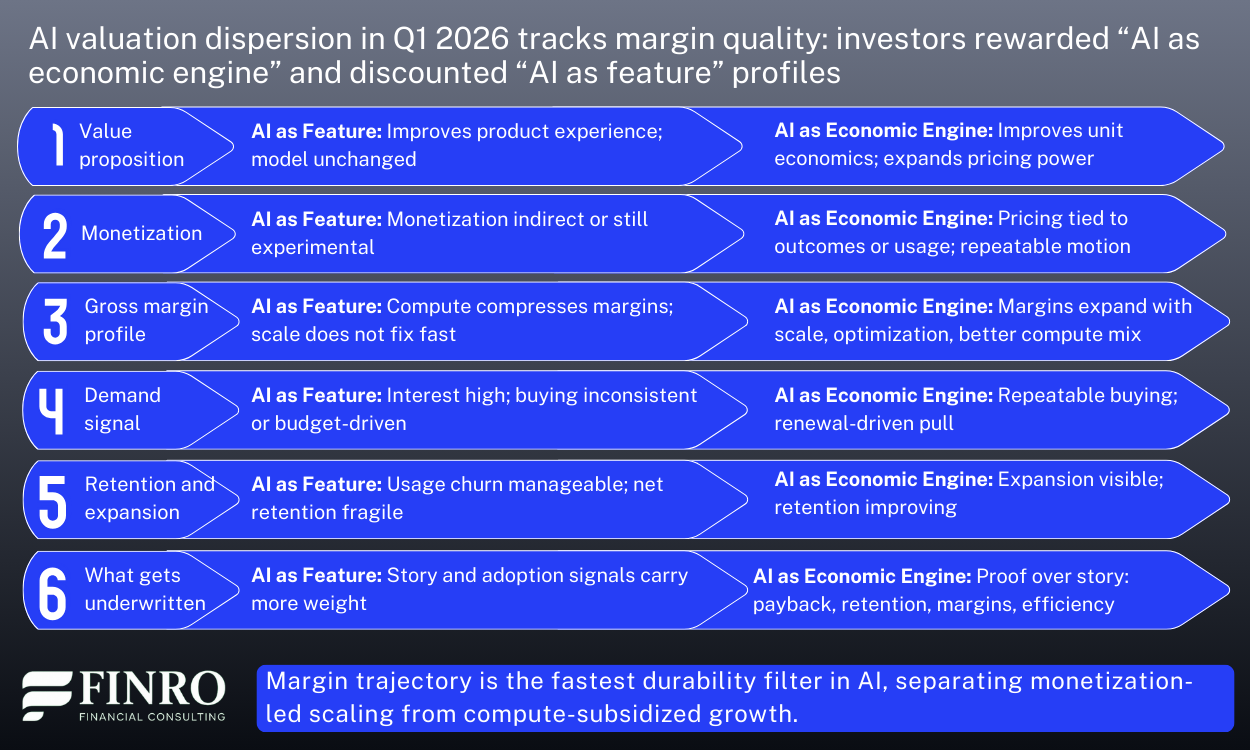

Margin quality and compute exposure started to matter more than category labels

In Q1 2026, the market continued to separate “AI as feature” from “AI as economic engine.” The question was not whether AI is inside the product. The question was whether AI improves the business model or strains it.

Companies with improving gross margin profiles, or at least a clear path to improvement, tended to be rewarded. Companies where revenue still looked partially subsidized by compute were treated more cautiously, even with strong growth.

That is not a moral judgment about using compute to grow. It is a recognition that the multiple is a bet on future cash generation. When unit economics are unclear, the multiple becomes a smaller bet.
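The gap between a reported margin and a fully loaded one is simple arithmetic. The sketch below uses hypothetical figures (not taken from any company or from the dataset) to show how moving inference compute into cost of revenue changes the gross margin an investor actually underwrites.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


# Hypothetical quarterly figures in $M, for illustration only.
revenue = 10.0
hosting_and_support = 2.0  # cost of revenue excluding model inference
inference_compute = 3.5    # inference spend that is subsidized or booked elsewhere

reported = gross_margin(revenue, hosting_and_support)
fully_loaded = gross_margin(revenue, hosting_and_support + inference_compute)

print(f"reported gross margin:     {reported:.0%}")
print(f"fully loaded gross margin: {fully_loaded:.0%}")
```

Under these assumptions the same revenue shows an 80% margin on paper but 45% once compute is included, which is the kind of gap that shrinks the bet a multiple represents.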

Efficiency became a valuation lever again

The market’s tolerance for high burn did not disappear, but the bar for “good burn” rose. In Q1 2026, dispersion in multiples was widest where growth efficiency diverged.

Investors were effectively asking:

Does every incremental dollar of revenue require proportional spend?

Are CAC and payback improving, stable, or deteriorating?

Is growth coming from scalable channels and repeatable sales cycles?

Does the business get easier to scale over time, or harder?

This is one reason the same growth rate can produce very different outcomes. Growth that gets cheaper to generate deserves a higher multiple than growth that gets more expensive.
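One way to see “growth that gets cheaper” is CAC payback. The sketch below uses one common simplified definition (sales and marketing spend divided by monthly gross profit on new ARR) and two hypothetical companies that add identical new ARR each quarter; all figures are illustrative assumptions, not benchmarks.

```python
def cac_payback_months(sm_spend: float, new_arr: float, gross_margin: float) -> float:
    """Months of gross profit needed to recover sales and marketing spend.

    Simplified definition: S&M spend / (new ARR / 12 * gross margin).
    """
    monthly_gross_profit = new_arr / 12 * gross_margin
    return sm_spend / monthly_gross_profit


# Two hypothetical companies adding the same new ARR each quarter ($M).
# Each tuple is (S&M spend, new ARR, gross margin) for consecutive quarters.
company_a = [(6.0, 4.0, 0.75), (6.0, 5.0, 0.78)]  # spend flat, margin improving
company_b = [(6.0, 4.0, 0.60), (8.0, 5.0, 0.55)]  # spend rising, margin eroding

for name, quarters in [("A", company_a), ("B", company_b)]:
    paybacks = [cac_payback_months(*q) for q in quarters]
    trend = "improving" if paybacks[-1] < paybacks[0] else "deteriorating"
    print(name, [round(p, 1) for p in paybacks], trend)
```

Headline growth is identical, but A's payback shortens from 24 to about 18.5 months while B's lengthens from 30 to about 35 months. That difference in trajectory is what the efficiency questions above are probing.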

The practical implication: dispersion is the market’s way of pricing execution risk

When investors reprice quality, they are really repricing execution risk. Q1 2026 multiples increasingly reflected how many “ifs” remain in the story.

Fewer “ifs” translates into higher valuation outcomes, even if growth is slightly slower.

More “ifs” translates into discounts, even if growth looks strong on paper.

This sets up the rest of the analysis. The remainder of the article breaks down where those “ifs” tend to concentrate across the AI stack, starting with agentic AI and then the split between Core AI and Applied AI, where defensible distribution and workflow ownership became more central to valuation outcomes in Q1 2026.

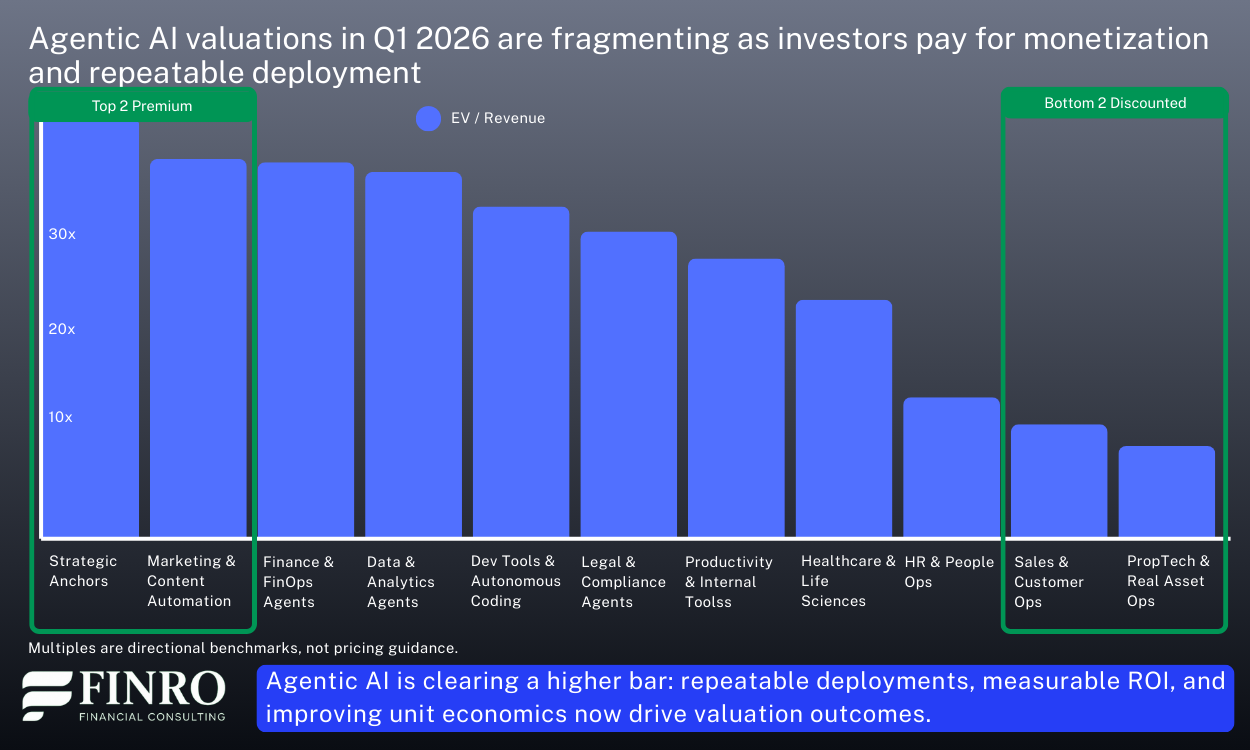

Agentic AI moved from narrative to early monetization, with uneven proof

Agentic AI was one of the loudest themes in Q1 2026, but the valuation signal was quieter and more discriminating. The market did not price “agents” as a category premium. It priced evidence that agents can be sold, deployed, and expanded without collapsing unit economics.

That distinction matters because agentic products sit at an awkward intersection of promise and cost. They can be sticky once embedded in workflows, but they can also be compute-intensive, operationally complex, and harder to scope than classic SaaS. In Q1, the companies that held up best treated “agentic” as a delivery mechanism, not as the business model.

What investors started rewarding in agentic profiles

1) Clear packaging and billing, not open-ended usage

The cleanest outcomes came where pricing was anchored to a business unit that finance teams can approve and renew. Think seats plus tiered usage, or outcome-aligned metrics with guardrails. The opposite profile, broad “pay for tokens” narratives without tight controls, got discounted because buyers struggled to forecast spend and teams struggled to forecast margins.

2) Narrow workflows with repeatable deployment

The strongest commercial signal came from agents that do one job reliably, in a known environment, with an implementation path that can be replicated. Investors treated narrowness as a feature, not a limitation, because it translated into shorter cycles, lower services drag, and clearer post-sale expansion.

3) Proof of expansion inside existing accounts

In Q1, “pilot wins” mattered less than expansion motion. Investors leaned into questions like: Do deployed agents spread to adjacent teams? Do they take on additional tasks without heavy customization? Are renewals behavior-driven rather than champion-driven? Where the answers were credible, valuation outcomes improved even when headline revenue was not yet large.

4) A margin story that improves with learning and scale

Agentic products tend to start with a heavier compute and support footprint. The market showed more willingness to underwrite that if the company could explain why gross margin should expand, and show early evidence that it is happening. Examples include model routing, caching, better prompt and tool orchestration, and reducing human-in-the-loop over time. The key was trajectory.
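The routing and caching levers can be sketched as an expected cost per task. The model below is a simplified assumption: a cache hit costs a fixed amount, and each miss goes either to a cheaper small model or to a larger one. All prices are hypothetical, and support and human-in-the-loop costs are deliberately left out.

```python
def blended_cost_per_task(
    large_cost: float,
    small_cost: float,
    small_share: float,
    cache_hit_rate: float,
    cache_cost: float = 0.0,
) -> float:
    """Expected inference cost per task under simple routing and caching.

    A cache hit costs `cache_cost`. On a miss, the task is routed to the
    small model with probability `small_share`, otherwise to the large model.
    """
    miss_cost = small_share * small_cost + (1 - small_share) * large_cost
    return cache_hit_rate * cache_cost + (1 - cache_hit_rate) * miss_cost


price_per_task = 0.05  # hypothetical price charged per completed task ($)

# Hypothetical early vs. later operating states for the same agent.
early = blended_cost_per_task(0.02, 0.002, small_share=0.0, cache_hit_rate=0.0)
later = blended_cost_per_task(0.02, 0.002, small_share=0.6, cache_hit_rate=0.3)

for label, cost in [("early", early), ("later", later)]:
    margin = (price_per_task - cost) / price_per_task
    print(f"{label}: cost/task ${cost:.4f}, compute-only margin {margin:.0%}")
```

At an unchanged price, routing most tasks to a cheaper model and serving some from cache lifts the compute-only margin from 60% to roughly 87%. This is the kind of trajectory investors wanted to see explained, not just promised.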

Where the market stayed skeptical

1) “Agent as a demo” without a procurement narrative

Many products looked compelling in a controlled environment but struggled to translate into budgeted, renewable spend. When buyers treat the agent as experimental, the revenue tends to be fragile. Investors priced that fragility.

2) Implementation that looks like bespoke services

If each deployment requires heavy tailoring, deep integrations, or ongoing ops support, the agent starts to resemble a project business. That can still work, but it compresses multiples unless the company can show a credible path to standardization.

3) Margins obscured by compute volatility

Where compute costs were a meaningful swing factor and the company could not explain controls, unit economics became a reason to wait. In Q1 2026, “we will fix it later” did not clear the bar as often.

The takeaway for founders and investors

Agentic AI is not being valued for the word “agent.” It is being valued for repeatable deployment, controlled economics, and expansion inside accounts. The winners in Q1 looked less like general-purpose copilots and more like workflow machines with disciplined packaging.

If you are building in agentic AI, the fastest way to move from category interest to valuation support is to show three things together: a billable unit buyers can renew, expansion evidence post-deployment, and a gross margin trajectory that improves as usage scales.

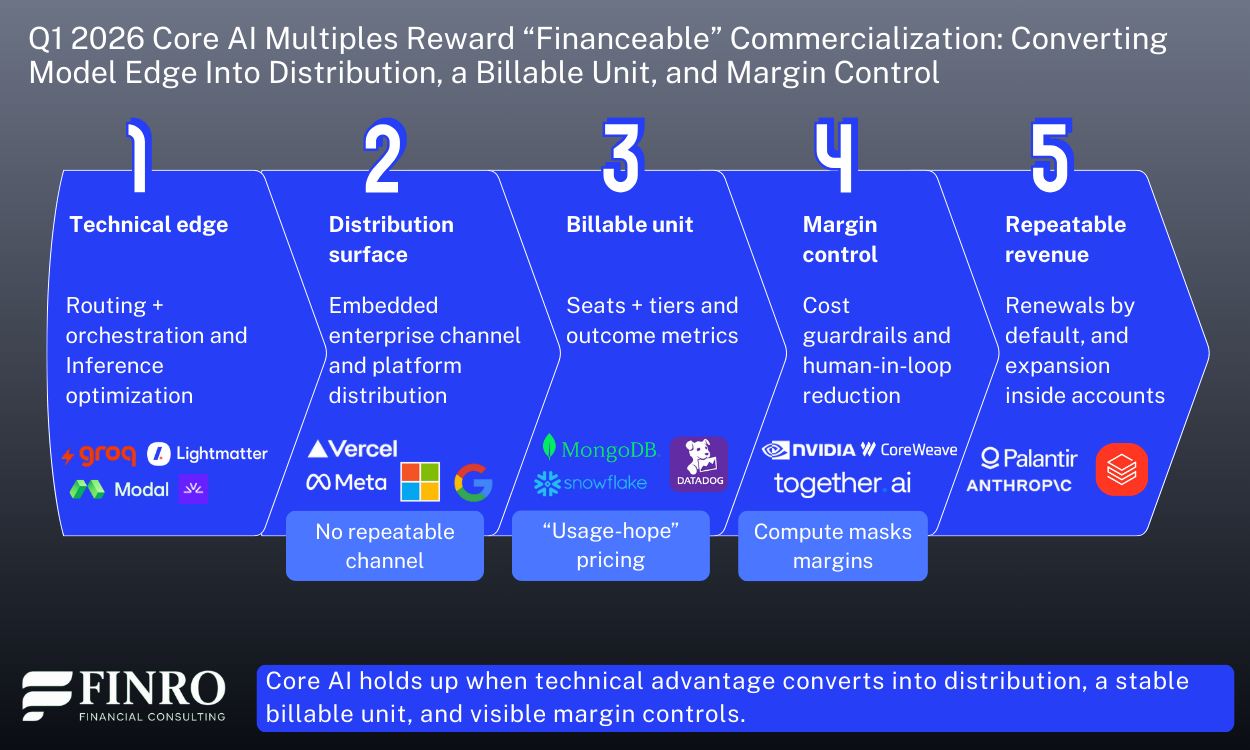

Core AI vs. Applied AI: valuation follows distribution and monetization, not “model sophistication”

Core AI and Applied AI did not converge in Q1 2026. If anything, the separation became easier to explain.

Core AI remains strategically important, but it carries two valuation headwinds that show up repeatedly across comps, rounds, and M&A:

Differentiation is harder to defend over time. Model capability diffuses, open alternatives improve, and switching costs can be limited.

Monetization is structurally constrained by compute intensity and pricing pressure, especially when the product is sold as a broad platform rather than tied to a defined workflow.

Applied AI is not “easier,” but it is often easier to underwrite because it can attach to distribution, to budgets, and to operational outcomes.

Core AI sits in the foundation layer, meaning models, training and inference infrastructure, developer tooling, and data systems. Applied AI sits on top, using that foundation to deliver a specific outcome inside a defined workflow or vertical. Core AI is judged more on performance, cost curve, and platform leverage. Applied AI is judged more on repeatability, retention, and ROI.

What the market rewarded inside Core AI

In Q1 2026, Core AI outcomes were shaped less by model performance alone and more by who could monetize distribution and translate technical advantage into durable, repeatable revenue.

1) Distribution advantages that reduce go-to-market risk

The better-supported valuation outcomes in Core AI were explained by access to customers and repeatable channels. Examples include embedded enterprise relationships, platform distribution, or a product surface that expands usage without a proportional increase in sales and support burden.

2) Clear unit economics despite compute

Investors showed less patience for margin opacity. The companies that held up best could explain gross margin drivers with specificity: routing, caching, inference optimization, pricing guardrails, and an operating plan that improves contribution margin as scale increases.

3) Business models that are not purely “usage hope”

Core AI offerings that anchored pricing to a stable billable unit tended to read as more financeable than narratives that depended on expanding token consumption without tight controls. Buyers want predictability, and investors want underwriting clarity.

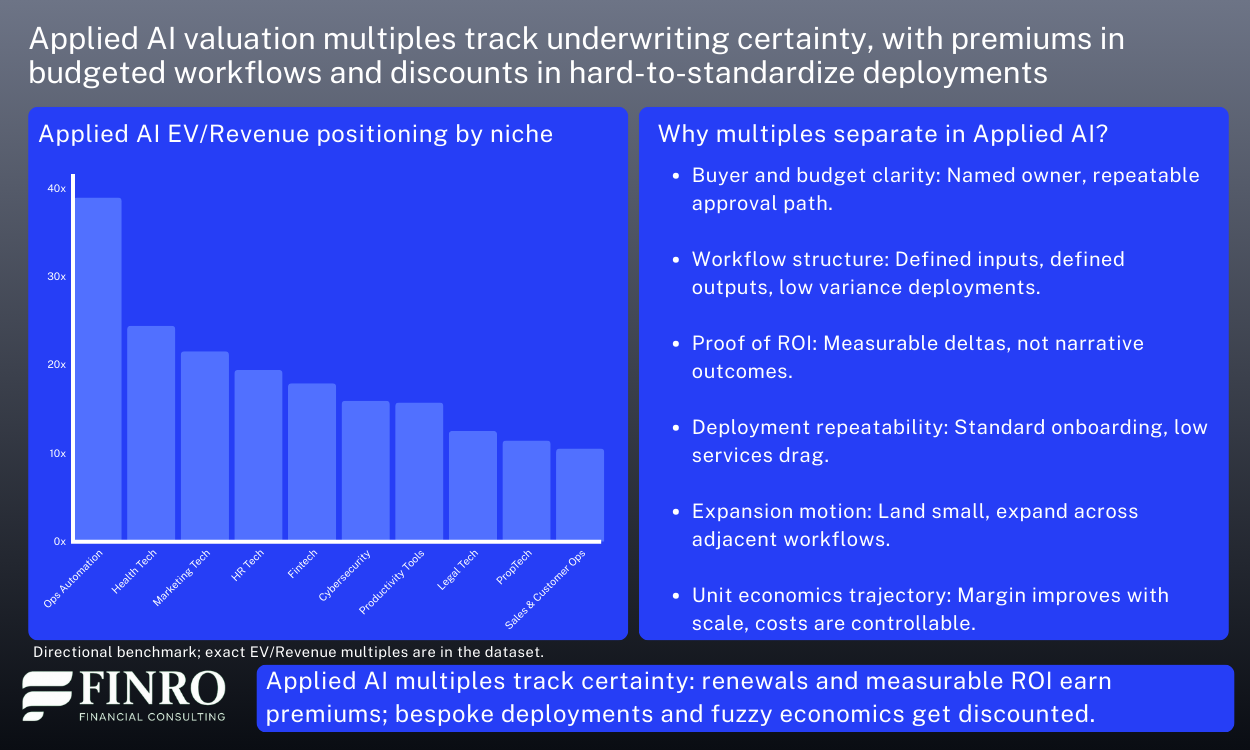

What the market rewarded inside Applied AI

Applied AI cleared the bar more often when it embedded into a defined workflow with a buyer, a budget, and a renewal path. The premium showed up where AI was packaged as a product with measurable ROI, not as an experiment.

1) Vertical depth that creates defensible outcomes

Applied AI companies with workflow ownership, domain-specific data advantages, and integration into the system of record tended to produce valuation support even when growth was not the fastest in the set. Depth translated into retention and expansion.

2) A procurement narrative that makes renewal the default

The strongest profiles sold into a budget line with clear ownership. When the buyer can renew as part of normal operations, multiples tend to be more resilient.

3) Expansion motion that compounds without heavy customization

Investors repeatedly looked for the same pattern: land with a narrow use case, then expand into adjacent workflows in the same department, then broaden across the org. Where expansion required services-heavy tailoring, outcomes weakened.

The practical implication: “core vs. applied” is becoming a shorthand for underwriting certainty

“Core vs. Applied” is increasingly how investors compress a lot of diligence into one question: how predictable is commercialization? The re-rating in Q1 suggests the market is paying less for technical ambition and more for proof that the business compounds.

In Q1 2026, this distinction was less about technology categories and more about underwriting.

Core AI tends to face more questions around durability and pricing power. Applied AI tends to face more questions around distribution scalability and retention. Dispersion widened because the market is pricing those questions more explicitly than it did a few quarters ago.

For founders, the translation is straightforward: whichever side of the stack you operate in, make the business legible in the terms investors are using right now, namely distribution, billable unit, retention, and margin trajectory.

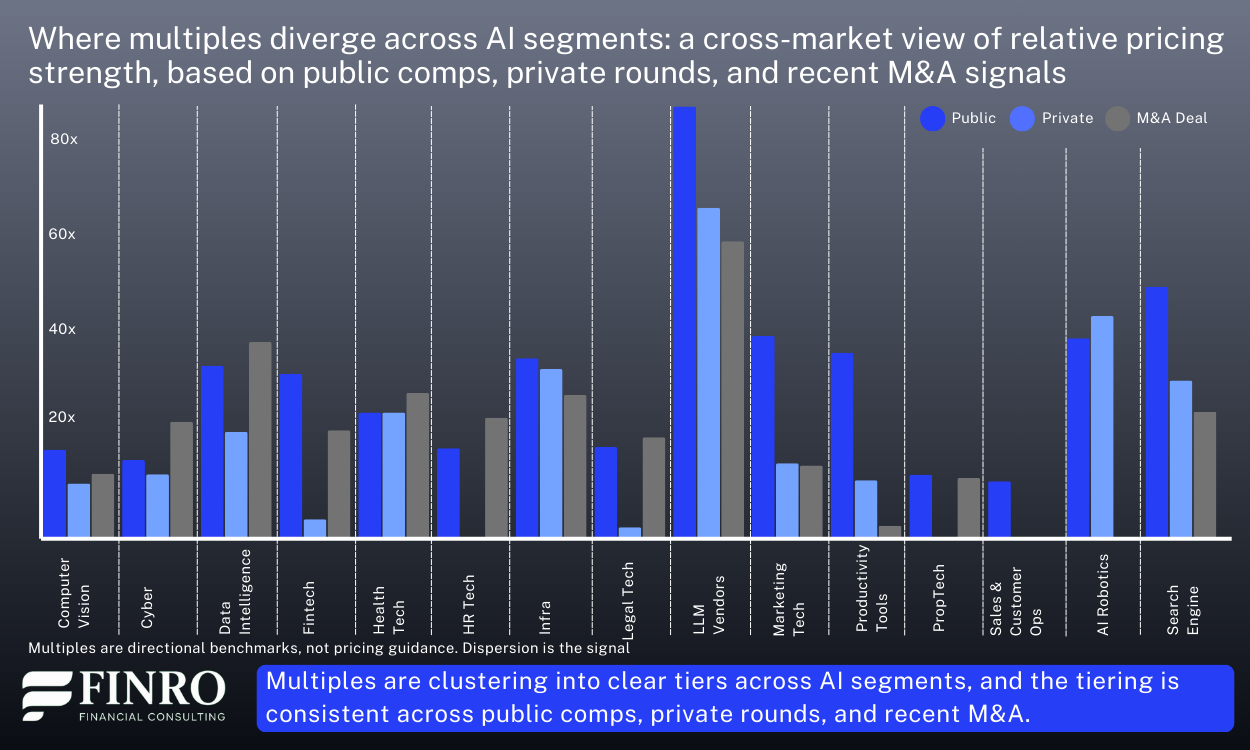

Where multiples diverge across AI segments

So far, the pattern has been consistent: AI multiples in Q1 2026 did not move in one direction. They separated based on underwriting certainty.

Premium outcomes clustered around repeatable monetization, improving unit economics, and durable demand. Discounts hit companies where revenue is harder to forecast, compute still obscures margins, or growth still requires proportional spend.

This section makes that dispersion legible by combining two lenses: a practical niche taxonomy and cross-market triangulation.

A taxonomy that matches underwriting, not buzzwords

A taxonomy is only useful if it maps to how investors price risk. The goal is not to list every niche. It is to group the market by what drives valuation outcomes: where margin pressure comes from, how predictable the buying motion is, and whether deployments scale cleanly.

Finro’s dataset taxonomy is organized that way across the stack, from foundation and infrastructure layers (Infrastructure, LLM Vendors) to data and retrieval layers (Data Intelligence, Search Engine) and workflow-facing applications (Cybersecurity, Fintech, Health Tech, Legal Tech, HR Tech, Marketing Tech, Sales & Customer Ops, Productivity Tools, PropTech, Computer Vision, AI Robotics).

Readers do not need an exhaustive index of niches. They need the logic of why these groups clear differently.

Cross-market triangulation: how public, private, and M&A “vote” on quality

Valuation signals do not come from one market in isolation. Public comps, private rounds, and M&A each price risk differently. The useful signal is how they align.

Public markets are typically fastest to punish margin opacity and weak efficiency. When dispersion widens in public comps, it is usually because the market stops underwriting “future operating leverage” without evidence.

Private rounds can lag public repricing and still support premium outcomes, but dispersion inside private markets has become a clear test of “fundable quality.” Similar growth rates clear at very different outcomes depending on whether revenue is repeatable, margins have a believable path, and go-to-market is becoming more predictable rather than more bespoke.

M&A is the most pragmatic market. Strategic buyers tend to pay for assets they can integrate and scale: workflow ownership, distribution fit, and economics that hold under real usage. Where deployments look like bespoke services or compute costs remain uncontrolled, M&A benchmarks tend to be more conservative.
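Reading the three markets side by side can be sketched as keeping each lens separate instead of blending them into one number. The example assumes a hypothetical flat table of observations tagged by niche and market type, with EV/Revenue as the multiple. The field names and figures are placeholders, not the dataset’s actual structure or values.

```python
from statistics import median

# Hypothetical observations. Field names and values are illustrative only;
# they are not Finro's dataset schema or actual Q1 2026 multiples.
observations = [
    {"niche": "Cybersecurity", "market": "public", "ev_revenue": 7.0},
    {"niche": "Cybersecurity", "market": "public", "ev_revenue": 9.0},
    {"niche": "Cybersecurity", "market": "public", "ev_revenue": 11.0},
    {"niche": "Cybersecurity", "market": "private", "ev_revenue": 15.0},
    {"niche": "Cybersecurity", "market": "private", "ev_revenue": 20.0},
    {"niche": "Cybersecurity", "market": "m&a", "ev_revenue": 8.0},
    {"niche": "Cybersecurity", "market": "m&a", "ev_revenue": 10.0},
    {"niche": "Legal Tech", "market": "public", "ev_revenue": 6.0},
]


def triangulate(rows: list[dict], niche: str) -> dict[str, float]:
    """Median EV/Revenue for one niche, reported separately per market lens."""
    by_market: dict[str, list[float]] = {}
    for row in rows:
        if row["niche"] == niche:
            by_market.setdefault(row["market"], []).append(row["ev_revenue"])
    return {market: median(values) for market, values in by_market.items()}


lenses = triangulate(observations, "Cybersecurity")
spread = max(lenses.values()) / min(lenses.values())
print(lenses, f"max/min spread: {spread:.1f}x")
```

Keeping a median per lens, rather than one pooled average, preserves the disagreement between public, private, and M&A pricing, which is the signal this section treats as meaningful.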

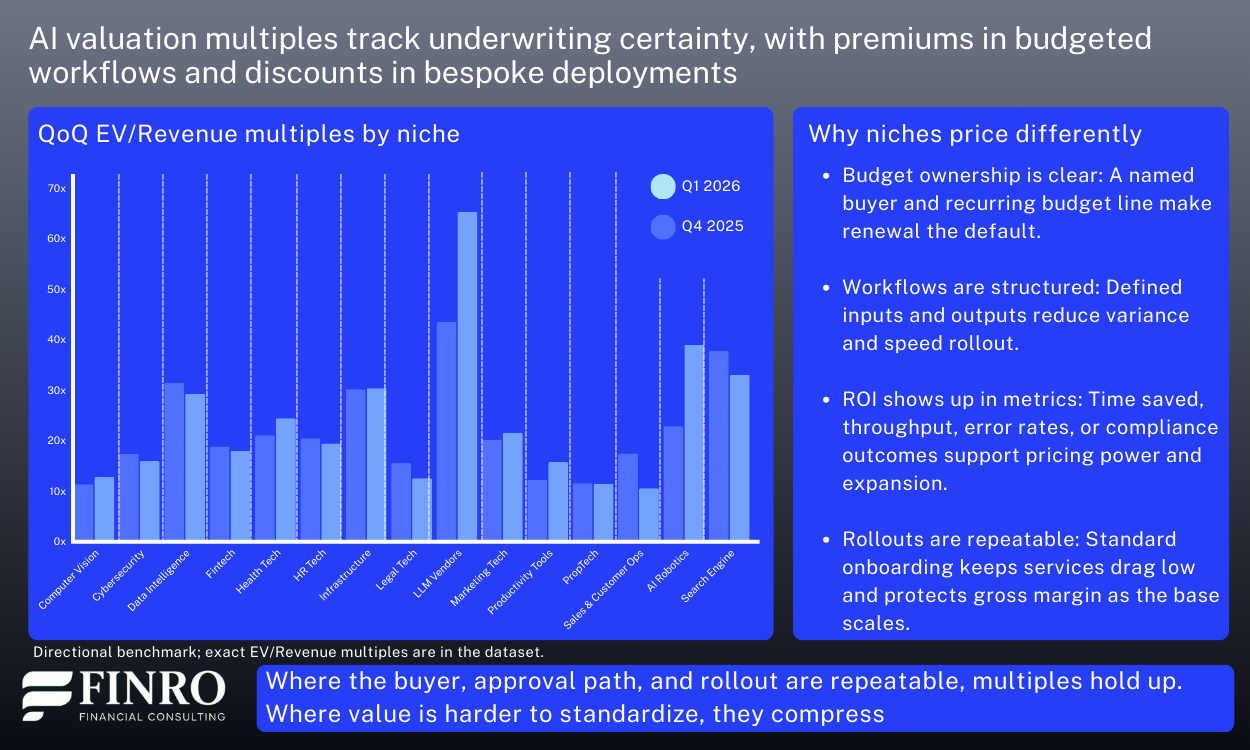

The Q1 shift: public repricing, M&A anchoring, private upside

A quarter-over-quarter view is useful precisely because it removes the “AI is up/down” framing. From Q4 2025 to Q1 2026, pricing did not move as one market. The direction depended on the lens. Public comps repriced more visibly, private rounds continued to support upside selectively, and M&A stayed the most grounded reference point.

At the aggregate level, the split is straightforward. Public multiples stepped up quarter over quarter, private moved modestly higher, and M&A was broadly stable. That combination fits a market where public comps are re-rating a narrower set of “bankable” exposure, private investors are still underwriting scarcity, and acquirers continue to price to ROI and integration reality.

Public repricing was concentrated, not broad. The clearest step-change showed up in LLM vendors, and health tech also moved meaningfully higher in public comps. Infrastructure held up with a modest increase, consistent with continued confidence in picks-and-shovels exposure. In contrast, data intelligence, marketing tech, and productivity tools compressed in public multiples, which aligns with categories where differentiation and pricing power can be harder to defend across the full peer set.

Private upside stayed alive, but became more selective. The most notable private increases came in marketing tech and productivity tools, even as public comps in those areas did not move the same way. That pattern typically signals “winner pricing” in private markets for a handful of leaders, while public markets anchor the broader category more conservatively. Several other segments were flat or down in private marks, reinforcing that premiums are narrowing to profiles that can compound with improving economics.

M&A continued to anchor. Across most segments, deal multiples were stable quarter over quarter, which is exactly what you would expect from the most pragmatic filter. Buyers reprice slowly because acquisitions must clear integration cost and ROI, not narrative. Where M&A did move, it reinforced that the bar remains high: legal tech cleared at lower levels, while cybersecurity continued to hold up.

Net, the QoQ view supports the same takeaway as the rest of this update. Dispersion is not noise. It is the market applying three different tests at once: public markets re-rate what looks defensible, private markets pay for scarce upside, and M&A enforces what is monetizable under real operating constraints.

Download the Q1 2026 AI multiples dataset

If you want to go deeper than directional charts and narrative signals, the full reference set is available as a downloadable dataset.

Finro’s Q1 2026 AI valuation multiples database consolidates public comps, private rounds, and recent M&A into one structure, organized so you can benchmark a company the way investors actually triangulate pricing.

It is not a “one-number” view of AI. It is segmented by niche, split by market type, and designed to show where pricing holds up across public, private, and deal reality.

The dataset includes:

A company-level table spanning public, private, and M&A observations, with consistent fields to support peer selection and filtering.

Core AI and Applied AI cuts, aligned to the same taxonomy used in this article.

Summary tabs by niche and by funding round, so you can sanity-check valuation ranges against stage and business model.

A quarter-over-quarter niche view (Q4 2025 vs Q1 2026) to see which segments repriced, which stayed anchored, and where dispersion widened.

Typical uses include: building a comps set for a fund memo, pressure-testing a round narrative against public anchors and deal clears, and explaining to a board why two “AI companies” with similar growth can land at very different outcomes once revenue quality, margins, and underwriting certainty are priced in.

Summary: Dispersion is the signal

AI valuation multiples in Q1 2026 did not “move.” They separated further.

Across public comps, private rounds, and M&A, the market continued to reward a narrower profile of AI companies: clear monetization, durable demand, and unit economics that improve with scale. The discounts concentrated where revenue remains harder to forecast, deployments stay bespoke, or margins are still effectively subsidized by compute and services.

Three cross-market patterns explain most outcomes:

First, underwriting shifted from promise to proof. The highest valuation support clustered around repeatable commercialization signals such as budgeted spend, renewal behavior, and expansion inside accounts. Where growth depended on pilots, incentives, or fragile demand, multiples compressed even if headline momentum looked strong.

Second, the public, private, and M&A “filters” are not saying the same thing. Public markets anchor what looks defensible at scale. Private rounds price upside when scarcity and category leadership feel plausible. M&A remains the pragmatic benchmark because it must clear integration reality and buyer ROI.

Third, the split between core and applied remains a useful shorthand for what investors are actually underwriting. Core AI can clear premium pricing when it becomes picks-and-shovels infrastructure others must build on and when unit economics are explainable. Applied AI clears best where it is embedded in budgeted workflows with measurable ROI and repeatable rollouts, while feature-grade tools price very differently.

Net, there is no single “AI multiple.” Q1 2026 valuation outcomes are being set by where a company sits in the stack, how scarce and defensible that position is, and how cleanly revenue converts into durable economics.

Key Takeaways

Multiples diverged sharply as investors repriced revenue quality, not category hype.

Monetization clarity, improving unit economics, and durable retention drove premium outcomes.

Agentic AI rewarded repeatable deployment, controlled pricing, and post-pilot expansion; demos and bespoke rollouts were discounted.

Core AI is valued for distribution and picks-and-shovels leverage; Applied AI is valued for workflow ownership, budgets, and renewability.

Public anchors set baseline, private rounds price scarcity-driven upside, and M&A validates what is truly monetizable.

Answers to the Most Asked Questions

AI valuation multiples in Q1 2026 are highly dispersed. Premium outcomes go to contracted, repeatable revenue with improving unit economics, while “growth now, business model later” profiles are repriced downward.

Dispersion widened because investors tightened underwriting. Proof of renewability, margin trajectory once compute is fully loaded, and efficient scaling matter more than headline growth or category buzz.

Agentic AI companies earn better pricing when agents are packaged for procurement, deploy repeatably in narrow workflows, expand inside accounts, and show improving contribution margins as orchestration and support costs fall.

Core AI is valued on distribution leverage and infrastructure “rails.” Applied AI is valued on workflow ownership, budgeted renewals, measurable ROI, and expansion across adjacent processes.

To benchmark, use triangulation. Public comps anchor defensibility, private rounds price scarcity and upside, and M&A is the pragmatic filter that validates what a buyer can monetize and integrate.